Learning about Adversarial Perturbations

December 1, 2018

Adversarial perturbations are special kinds of distortions that trick machine learning and deep learning models into misclassifying their input. Adversarial examples in neural networks were first discovered and explored by Szegedy et al. (2013) in their paper: Intriguing properties of neural networks. They showed that distortions or perturbations can be learnt from a model, which when applied to its input, cause the model to confidently misclassify the input.

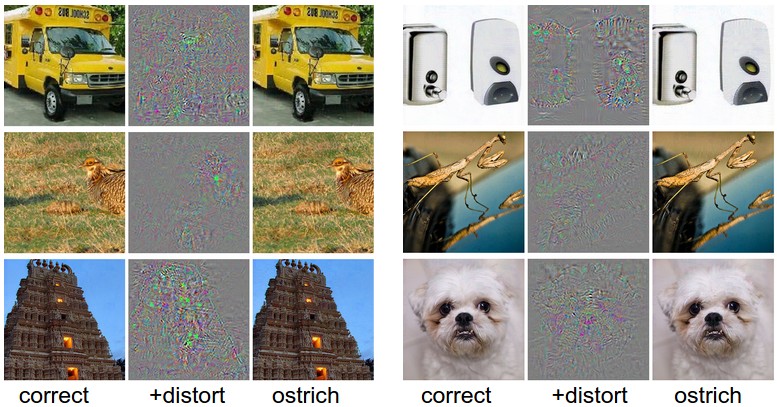

Take a correctly classified image (left image in both columns), and add a tiny distortion (middle) to fool the ConvNet with the resulting image (right). Taken from http://karpathy.github.io/2015/03/30/breaking-convnets/.

Adversarial examples are input that have been perturbed. Adversarial training is a technique for improving the defense of a deep learning model against adversarial perturbations by incorporating adversarial examples during model training.

Recently, as part of my academic coursework, I chose to review a paper titled: DeepFool: a simple and accurate method to fool deep neural networks. The crux of the paper is the introduction of an algorithm called DeepFool that is effective at finding the minimal perturbations necessary to fool DNNs. Furthermore, the perturbations that are learnt using DeepFool are more effective for adversarial training compared to a pre-existing method called Fast Gradient Sign Method (FGSM). In order to get up to speed with adversarial learning, I found these sources especially helpful:

- Tricking Neural Networks: Create your own Adversarial Examples

- Lecture 16 | Adversarial Examples and Adversarial Training By Ian Goodfellow

- Intriguing properties of neural networks

- Explaining and Harnessing Adversarial Examples

- Universal adversarial perturbations

- Foolbox: A Python toolbox to benchmark the robustness of machine learning models